From Wikipedia

“Augmented reality (AR) is a term for a live direct or indirect view of a physical, real-world environment whose elements are augmented by computer-generated sensory input such as sound, video, graphics or GPS data. It is related to a more general concept called mediated reality, in which a view of reality is modified (possibly even diminished rather than augmented) by a computer. As a result, the technology functions by enhancing one’s current perception of reality. By contrast, virtual reality replaces the real world with a simulated one.

Augmentation is conventionally in real-time and in semantic context with environmental elements, such as sports scores on TV during a match. With the help of advanced AR technology (e.g. adding computer vision and object recognition) the information about the surrounding real world of the user becomes interactive and digitally manipulable. Artificial information about the environment and its objects can be overlaid on the real world. The term augmented reality is believed to have been coined in 1990 by Thomas Caudell, working at Boeing.[1]

Research explores the application of computer-generated imagery in live-video streams as a way to enhance the perception of the real world. AR technology includes head-mounted displays and virtual retinal displays for visualization purposes, and construction of controlled environments containing sensors and actuators.”

[macme title='Augmented Reality on Wikipedia' id='44']

This is a very technical definition of Augmented Reality. In our practice we have seen that many definitions exist for concepts, ideas and practices that can conveniently be traced back to the idea of augmenting reality, from the points of view of Psychology, Anthropology, Cognitive Sciences, Architecture and, of course, technology and design.

Central to the idea of Augmented Reality (AR) is the creation of layers of digital information/vision/sound/interaction/experience which are seamlessly and coherently overlaid onto “ordinary” physical reality, creating a new space in which digital and analogue are interconnected and interrelated.

The most common approach to AR is a visual one: “Digital things” are visually layered onto “Analog things”, creating a new “AR Thing” which is seamlessly analog-digital.

Possibly two of the most interesting parts of the concept of Augmented Reality are:

- the idea of creating a world which is analog-digital

- the idea of creating a reality which acts as a framework able to host multiple layers of reality at the same time

[macme title='Ubiquitous Computing on Wikipedia' id='45']

[macme title='Location Based Services' id='46']

[macme title='Cross Media' id='47']

“The real-time city is now real! The increasing deployment of sensors and hand-held electronics in recent years is allowing a new approach to the study of the built environment. The way we describe and understand cities is being radically transformed – alongside the tools we use to design them and impact on their physical structure.”

[macme title='The Senseable cities lab at MIT' id='48']

[macme title='Wiki City at MIT' id='49']

“This platform enables people to become distributed intelligent actuators, which pursue their individual interests in cooperation and competition with others, and thus become prime actors themselves in improving the efficiency of urban systems.”

In this case, as well, we see the idea of layering digital information onto analogue space to create new opportunities for expression, interaction and action, suggested by novel forms of awareness and by the shared knowledge processes which we can enact in urban and rural spaces.

This is indeed an Augmented Reality, even if it does not comply with the “standard” technical description of AR. There are no “3D objects visually integrated onto the real world view”, yet reality (our perception of it) is truly augmented, along with our possibilities for perception and cognition. While walking in the streets we are able to know more about the city than our analog senses allow: we gain a new eye, or ear, sensitive to information about the environment, pollution, energy use and social issues. And we can use this new awareness to act and react, modifying our behaviours and adopting more active models for our presence in the world.

All these concepts lead to an additional one, which is the second idea we highlighted above: the possibility of creating, and making accessible, a multiplicity of points of view.

When anthropologist Massimo Canevacci speaks about the Polyphonic Metropolis, he describes a possibilistic scenario. Here the city becomes an intense dialogue among multiple dissonant voices that together create the environment in which we live. This is a cultural process in which humanity is described as a constantly mutating network that creates multiple topographies of space and time, which simultaneously harmonize and de-harmonize, producing the state of creative noise in which we live. In this vision we constantly move between analog and digital domains, and the ubiquity of technology makes these transitions progressively less identifiable, leading towards a scenario in which digital and analog mix together to form something new. In this process the city changes, the body changes, our life processes change. The city becomes the communicational metropolis, the body becomes the body-corpse, and processes turn into communication, visual interaction and material-immaterial relations performed across flesh and bits.

The infoscape rises to replace the classical idea of landscape: it describes the mutual interaction between digital data and the materials that build the analog part of reality, and becomes a novel way of seeing space, time and relations. Digital information and communication become new building materials for our cities, a new opportunity for architecture and a new space for cognition.

[macme title='Gregory Bateson' id='50']

[macme title='Arjun Appadurai' id='51']

The idea of infoscape is particularly interesting from an anthropological point of view, as it represents cultural dynamics that have increasingly drawn the attention of anthropologists and ethnographers since the research of Bateson, Appadurai, Mead, Clifford, Geertz and other fundamental scholars of cultures, languages, behaviors and relationships among human beings. This research has had radical impacts on a diverse set of disciplines and domains, such as politics, economics, business, the sciences and the arts, which in the contemporary era are starting to deal with the substantial mutation of networked, connected human beings.

[macme title='Margaret Mead' id='52']

[macme title='James Clifford' id='53']

[macme title='Clifford Geertz' id='54']

These approaches suggest the multiplicity of cultures and their mutual interconnection and influence, representing emergent, dynamic networks which constantly describe our human environment. In this vision we can imagine the world as a stratified reality in which each layer interacts with and mutates every other layer, and in which each human being benefits from his/her own perspective on this stratification, a perspective which itself constitutes a layer in the overall emergent scheme. This idea is in perfect sync with the idea of Augmented Reality, through which we can actually materialize such a model and make it accessible using digital tools: a multilayer, hyperlinked space in which anyone can publish a point of view and in which all points of view can be hyperlinked to each other in dynamic, mutating, liquid ways.

This is exactly what we will do during the RWR workshop:

- research the new models for communication and interaction with the world suggested by these approaches;

- research the new forms of narrative and relation patterns which these technologies and methodologies suggest;

- understand how to use technologies to enact performative, poetic, political, business, artistic and activist layers of reality;

- learn how to express our point of view through ubiquitous publishing practices, in free, autonomous, self-determined ways;

- learn how the possibility of ubiquitously publishing information and narratives, and of creating ubiquitous opportunities for communication and interaction, enables new models for business, art, ecology, urban development, culture, knowledge and, most of all, awareness and human interaction.

With this idea of what can be considered an augmentation of reality, we can proceed to the next steps.

The goal is to design and implement a product which activates some of these possibilities: a new narrative in which multiple points of view are expressed in a space and interwoven with real-time information, creating a narrative infoscape that lets us experience a potentially infinite number of perspectives on reality by walking through the space itself. We will create an Augmented Reality Movie.
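A walk-through infoscape like this relies on a location-based lookup: as the viewer moves, the points of view anchored near their position become visible. The workshop does not specify how this will be implemented; the following is a minimal Python sketch assuming each point of view is stored with a latitude/longitude and a layer name. `PointOfView`, `visible_points` and the radius filter are hypothetical names, and distance is computed with the standard haversine great-circle formula.

```python
import math
from dataclasses import dataclass

EARTH_RADIUS_M = 6_371_000  # mean Earth radius, in metres


@dataclass
class PointOfView:
    """A hypothetical geo-anchored contribution to the narrative infoscape."""
    author: str
    layer: str  # e.g. "poetic", "political", "realtime-data"
    lat: float
    lon: float
    text: str


def haversine_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance between two lat/lon points, in metres."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    # min() guards against tiny floating-point overshoots above 1.0
    return 2 * EARTH_RADIUS_M * math.asin(min(1.0, math.sqrt(a)))


def visible_points(points, lat, lon, radius_m, layers=None):
    """Points of view within radius_m of the walker, nearest first.

    If `layers` is given, only those layers are shown, so each walker can
    choose which perspectives to switch on.
    """
    hits = []
    for p in points:
        if layers is not None and p.layer not in layers:
            continue
        d = haversine_m(lat, lon, p.lat, p.lon)
        if d <= radius_m:
            hits.append((d, p))
    hits.sort(key=lambda pair: pair[0])
    return [p for _, p in hits]
```

For example, `visible_points(points, 41.8902, 12.4922, 300)` would return whatever has been anchored within 300 m of the Colosseum in Rome; re-running the query each time the walker's position updates is what turns the walk into the movie.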

[macme title='FakePress Publishing' id='55']

ConnectiCity

architectures made of information, interconnection and relation

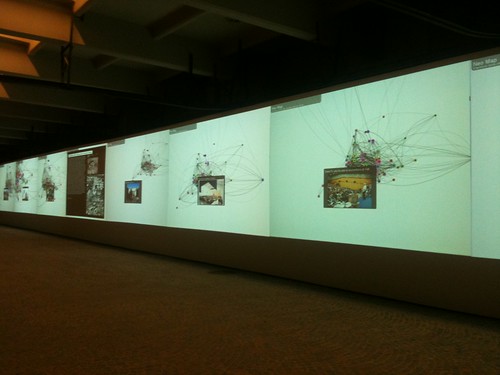

ConnectiCity transforms the architectural surfaces of cities into a new layer of reality built through communication, information and the visions of people. Launched for the first time in Rome, at the Festa dell’Architettura organized by the city administration and the Order of Architects, in the form of the “Atlante di Roma”, ConnectiCity has since been implemented in multiple versions. Each version transformed the architectural surfaces of the city into a digital conversation sphere, displaying information gathered in real time from social networks, databases of citizens’ visions of the city, and instant visualizations of data about the city’s energy, transport and social profiles. The city narrates other stories found in data, in the interconnections of people, in their dreams and possibilistic perceptions of the place they live in, and in the life of the city itself, as gathered from sensors and systems.

ConnectiCity fosters a new way to perceive the city, including new kinds of citizenship: more informed, active and participatory.

The instances of this project have been widely appreciated by researchers, artists and public administrations, and are currently featured in scientific papers and artistic festivals.

[macme title='ConnectiCity at AOS' id='56']

[macme title='ConnectiCity at FakePress' id='58']

[macme title='the Atlas of Rome' id='59']

Squatting Supermarkets

augmented reality to create squatted communication practices inside supermarkets

Products have incredible and complex stories, made of transportation, production, people and places. Yet products (and supermarkets) fail to show the enormous richness that characterizes their production and distribution. Product labels and supermarket communication carry a very limited set of points of view and voices. Squatting Supermarkets addresses this situation by creating a free, autonomous layer of information on products and supermarkets using augmented reality.

Squatting Supermarkets uses the logos and packaging graphics of products as AR fiducial markers. A content management system allows attaching information and interactive experiences to logos, instantly transforming them into open, accessible, emergent spaces for communication. A mobile application allows consumers to use their smartphones to take pictures of the logos on the products’ labels and to use them to access the augmented reality layers of information.
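The content management system behind Squatting Supermarkets is not described in detail here. As a minimal sketch, assuming that a separate image-recognition step (not shown) has already turned a photographed logo into a marker identifier, the CMS side can be modelled as a registry mapping marker IDs to named layers of annotations that anyone can contribute. `MarkerRegistry`, `Annotation` and the `"logo:..."` ID format are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Annotation:
    author: str
    layer: str    # e.g. "origin", "labour", "environment"
    content: str  # text, or a URL to a video or interactive experience


class MarkerRegistry:
    """Hypothetical CMS store: marker id -> layer name -> annotations.

    Anyone can attach content to a marker, so a product logo becomes an open
    space for many voices rather than only the brand's own.
    """

    def __init__(self) -> None:
        self._store = defaultdict(lambda: defaultdict(list))

    def attach(self, marker_id: str, annotation: Annotation) -> None:
        self._store[marker_id][annotation.layer].append(annotation)

    def layers_for(self, marker_id: str) -> dict:
        """What the mobile app would receive after recognising marker_id.

        Uses .get() so that looking up an unknown marker does not create
        an empty entry in the store.
        """
        layers = self._store.get(marker_id, {})
        return {name: list(items) for name, items in layers.items()}
```

Keeping layers separate lets the app show, say, only the “labour” stories on a logo, while the brand’s own packaging remains just one voice among many.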

Presented for the first time in 2009 at the Piemonte Share Festival, at the Museum of Science in Turin, Squatting Supermarkets has since been shown multiple times internationally, transforming products into a tool for ubiquitous storytelling that narrates the stories of the people, places and events that characterize their lives.

Squatting Supermarkets has been used as an innovative publishing system, as an art performance, as a tool for scientific research and as an instrument for social entrepreneurship.

[macme title='Squatting Supermarkets' id='60']

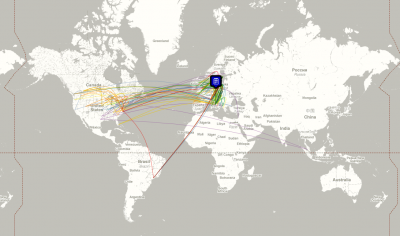

CoS, Consciousness of Streams

a global publication on the emotions of people

Presented at the 2011 edition of the Transmediale Festival, CoS (Consciousness of Streams) is a ubiquitous publication with more than 60,000 authors (estimate as of April 2011). CoS produces a real-time global emotional map of the world. Thousands of people currently use their mobile phones, web interfaces and dedicated, disseminated interfaces to describe how they feel about the place they live in. A global emotional map emerges, describing new geographies that are built not through roads and buildings, but through emotional scapes and the stories of people.
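Building such a map is essentially an aggregation problem. A minimal sketch, assuming each report arrives as a `(lat, lon, emotion)` tuple and that the map is drawn on a coarse latitude/longitude grid: the 1-degree cell size and the names `cell_of` and `emotional_map` are illustrative assumptions, not the documented CoS method. Each cell keeps a count per emotion and reports its most frequent one.

```python
import math
from collections import Counter, defaultdict


def cell_of(lat: float, lon: float, cell_deg: float = 1.0) -> tuple:
    """Snap a coordinate to a grid cell (hypothetical granularity)."""
    return (math.floor(lat / cell_deg), math.floor(lon / cell_deg))


def emotional_map(reports, cell_deg: float = 1.0) -> dict:
    """reports: iterable of (lat, lon, emotion) tuples.

    Returns {cell: (dominant_emotion, total_reports)}. On a tie,
    Counter.most_common keeps the emotion first reported in that cell.
    """
    counts = defaultdict(Counter)
    for lat, lon, emotion in reports:
        counts[cell_of(lat, lon, cell_deg)][emotion] += 1
    return {
        cell: (c.most_common(1)[0][0], sum(c.values()))
        for cell, c in counts.items()
    }
```

Because the counters are updated one report at a time, the same structure could be kept in memory and refreshed as new reports stream in, which is what a real-time map needs.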

[macme title='CoS, Consciousness of Streams' id='61']

[macme title='Participate to CoS' id='62']

[macme title='info on CoS' id='63']

[macme title='more info on CoS' id='64']

[macme title='CoS at Transmediale' id='65']

Leaf++

augmented reality for leaves

Leaf++ is an Augmented Reality system that allows creating AR interfaces using the leaves of trees.

Add your favourite leaf to the system and you can use it as an AR fiducial marker, through which you can attach information and which you can use as a tool for gestural interaction. Presented at ISEA 2011 in Istanbul.

[macme title='Leaf++' id='66']

Conference Biofeedback

tools for the next-steps of education

Conference Biofeedback is a wearable device that transforms conferences and lessons into a new format in which multiple voices can be expressed. A wearable circuit directly connects the body of the presenter to the emotional states of the audience. Members of the audience can use interfaces on their mobile phones and laptops to describe their emotional state while listening to a conference or taking part in a lesson.

When negative emotion levels exceed certain predefined thresholds, physical stimuli start acting on the presenter’s body, ranging from sounds and flashing lights to low-current electrical shocks. This extreme implementation points to a possibility: the innovation scenarios that could be implemented in education and communication processes to foster direct emotional connections among all parties involved, thus obtaining deeply collaborative practices and new perceptions and forms of awareness.
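The trigger logic can be sketched as a threshold check over recent audience reports. Everything here is an assumption for illustration: the sliding window, the share-of-negative-reports metric, the escalation thresholds in `STIMULI` and the `AudienceMonitor` class are hypothetical, and driving the actual sound, light or shock hardware is out of scope.

```python
from collections import deque

# Hypothetical escalation ladder: (threshold on share of negative reports, stimulus)
STIMULI = [(0.3, "sound"), (0.5, "flashing_lights"), (0.8, "low_current_shock")]


class AudienceMonitor:
    """Keeps the most recent audience reports and decides which stimuli to fire."""

    def __init__(self, window: int = 50) -> None:
        # Each entry is True for a negative report; old reports drop out automatically.
        self.recent = deque(maxlen=window)

    def report(self, is_negative: bool) -> None:
        self.recent.append(is_negative)

    def negative_share(self) -> float:
        """Fraction of recent reports that are negative (0.0 if none yet)."""
        return sum(self.recent) / len(self.recent) if self.recent else 0.0

    def active_stimuli(self) -> list:
        """All stimuli whose threshold is strictly exceeded, mildest first."""
        share = self.negative_share()
        return [name for threshold, name in STIMULI if share > threshold]
```

The bounded `deque` is the design choice that matters here: since only the last `window` reports count, the stimuli stop once the audience's mood improves, rather than accumulating for the whole session.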

[macme title='Conference Biofeedback' id='67']